Authors: Richard Klein, Tilburg University; Christine Vitiello, University of Florida; Kate A. Ratliff, University of Florida

Recent efforts to replicate findings in psychology have been disappointing. Many in the field are concerned that a large number of these null replications occur because the original findings are false positives, the result of misinterpreting random noise in data as a true pattern or effect.

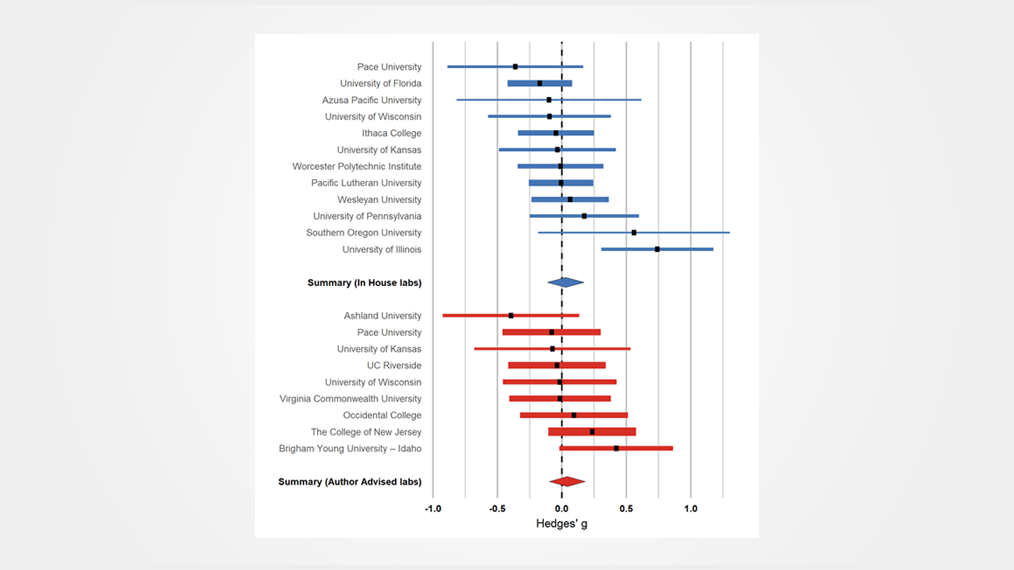

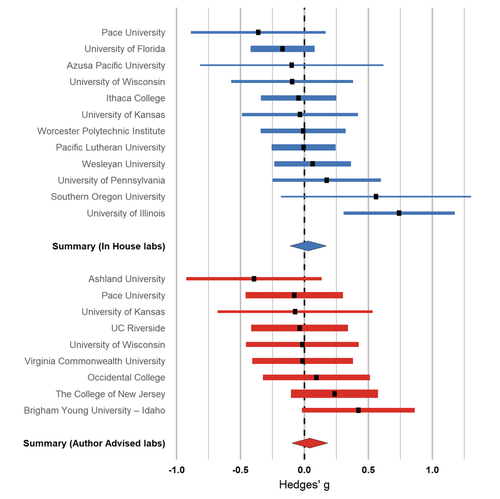

Plot summarizing results from each data collection site. Length of each bar indicates the 95% confidence interval, and thickness is scaled by the number of participants at that lab. Blue shading indicates an In House site, red shading indicates an Author Advised site. Diamonds indicate the aggregated result across that subset of labs. Plots for other exclusion sets are available on the OSF page (https://osf.io/xtg4u/).
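For readers curious how a per-lab confidence interval and an aggregated "diamond" of this kind can be computed, here is a minimal sketch. It assumes fixed-effect, inverse-variance pooling, which is only one common choice; this post does not state which aggregation method was used. The function names and all numbers below are hypothetical and are not taken from the study.

```python
import math

Z_95 = 1.959964  # two-sided 95% critical value of the standard normal


def confidence_interval(effect, variance):
    """Return the (low, high) 95% confidence interval for one lab's effect size."""
    half_width = Z_95 * math.sqrt(variance)
    return effect - half_width, effect + half_width


def pooled_estimate(effects, variances):
    """Fixed-effect inverse-variance pooling (an assumption, see the lead-in).

    Each lab is weighted by 1 / variance, so larger, more precise labs
    count for more. Returns (estimate, ci_low, ci_high).
    """
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    estimate = sum(w * d for w, d in zip(weights, effects)) / total_weight
    pooled_variance = 1.0 / total_weight
    low, high = confidence_interval(estimate, pooled_variance)
    return estimate, low, high


# Hypothetical labs: (protocol type, effect size, sampling variance).
example_labs = [
    ("In House", 0.10, 0.04),
    ("In House", -0.05, 0.02),
    ("Author Advised", 0.02, 0.03),
    ("Author Advised", 0.08, 0.05),
]

for protocol in ("In House", "Author Advised"):
    effects = [d for p, d, _ in example_labs if p == protocol]
    variances = [v for p, _, v in example_labs if p == protocol]
    estimate, low, high = pooled_estimate(effects, variances)
    print(f"{protocol}: {estimate:.3f} [{low:.3f}, {high:.3f}]")
```

An interval that includes zero, for a single lab or for a pooled subset, is consistent with no effect in that lab or subset.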

But failures to replicate are inherently ambiguous and can result from any number of contextual or procedural factors. Aside from the possibility that the original is a false positive, it may be that some aspect of the original procedure does not generalize to other contexts or populations, or that the procedure produced an effect at one point in time but those conditions no longer exist. Or the phenomenon may not be sufficiently understood to predict when it will and will not occur (the so-called "hidden moderators" explanation).

Another explanation — often made informally — is that replicators simply lack the necessary expertise to conduct the replication properly. Maybe they botch the implementation of the study or miss critical theoretical considerations that, if corrected, would have led to successful replication. The current study was designed to test this question of researcher expertise by comparing results generated from a research protocol developed in consultation with the original authors to results generated from research protocols designed by replicators with little or no particular expertise in the specific research area. This study is the fourth in our line of "Many Labs" projects, in which we replicate the same findings across many labs around the world to investigate some aspect of replicability.

To look at the effects of original author involvement on replication, we first had to identify a target finding to replicate. Our goal was a finding that was likely to be generally replicable, but that might have substantial variation in replicability due to procedural details (e.g. a finding with strong support but that is thought to require “tricks of the trade” that non-experts might not know about). Most importantly, we had to find key authors or known experts who were willing to help us develop the materials. These goals often conflicted with one another.

We ultimately settled on Terror Management Theory (TMT) as a focus for our efforts. TMT broadly states that a major problem for humans is that we are aware of the inevitability of our own death; thus, we have built-in psychological mechanisms to shield us from being preoccupied with this thought. In consultation with those experts most associated with TMT, we chose Study 1 of Greenberg et al. (1994) for replication. The key finding was that, compared to a control group, U.S. participants who reflected on their own death were higher in worldview defense; that is, they reported a greater preference for an essay writer adopting a pro-U.S. argument than an essay writer adopting an anti-U.S. argument.

We recruited 21 labs across the U.S. to participate in the project. A randomly assigned half of these labs were told which study to replicate, but were prohibited from seeking expert advice (“In House” labs). The remaining labs all followed a set procedure that was based on the original article and incorporated modifications, advice, and informal tips gleaned from extensive back-and-forth with multiple original authors (“Author Advised” labs).* In all, the labs collected data from 2,200+ participants.

The goal was to compare the results from labs designing their own replication, essentially from scratch using the published method section, with the labs benefitting from expert guidance. One might expect that the latter labs would have a greater likelihood of replicating the mortality salience effect, or would yield larger effect sizes. However, contrary to our expectation, we found no differences between the In House and Author Advised labs because neither group successfully replicated the mortality salience effect. Across confirmatory and exploratory analyses we found little to no support for the effect of mortality salience on worldview defense at all.

In many respects, this was the worst possible outcome: if there is no effect, then we can't really test the metascientific questions about researcher expertise that inspired the project in the first place. Instead, this project ends up being a meaningful datapoint for TMT itself. Despite our best efforts, and a high-powered, multi-lab investigation, we were unable to demonstrate an effect of mortality salience on worldview defense in a highly prototypical TMT design. This does not mean that the effect is not real, but it certainly raises doubts about the robustness of the effect. An ironic possibility is that our methods did not successfully capture the exact fine-grained expertise that we were trying to investigate. However, that itself would be an important finding: ideally, a researcher should be able to replicate a paradigm solely based on information provided in the article or other readily available sources. So the fact that we were unable to do so, despite consulting with original authors and enlisting 21 labs, all of which were highly trained in psychology methods, is problematic.

From our perspective, a convincing demonstration of basic mortality salience effects is now necessary to have confidence in this area moving forward. It is indeed possible that mortality salience only influences worldview defense during certain political climates or amid catastrophic events (e.g. national terrorist attacks), or that other factors explain this failed replication. A robust Registered Report-style study, where outcomes are predicted and analyses are specified in advance, would serve as a critical orienting datapoint to allow these questions to be explored.

Ultimately, because we failed to replicate the mortality salience effect, we cannot speak to whether (or the degree to which) original author involvement improves replication attempts.** Replication is a necessary but messy part of the scientific process, and as psychologists continue replication efforts it remains critical to understand the factors that influence replication success. And, it remains critical to question, and empirically test, our intuitions and assumptions about what might matter.

*At various points we refer to “original authors”. We had extensive communication with several authors of the Greenberg et al. (1994) article, and with others who have published TMT studies. However, that does not mean that all original authors endorsed each of these choices, or still agree with them today. We don’t want to put words in anyone’s mouth, and, indeed, at least one original author expressly indicated that they would not run the study given the timing of the data collection: September 2016 to May 2017, the period leading up to and following the election of Donald Trump as President of the United States. We took steps to address that concern, but none of this means the original authors “endorse” the work.

**Interested readers should also keep an eye out for Many Labs 5, which looks at similar issues.

Greenberg, J., Pyszczynski, T., Solomon, S., Simon, L., & Breus, M. (1994). Role of consciousness and accessibility of death-related thoughts in mortality salience effects. Journal of Personality and Social Psychology, 67(4), 627-637.
