Note: This post was updated on November 7, 2018 to reflect the changes recently implemented by Wiley, described here. The original version of this post can be found on the Wayback Machine.

Transparency is essential for scientific progress. Access to underlying data and materials allows us to make progress through new discoveries and to better evaluate reported findings, which increases trust in science. However, there are challenges to changing norms of scientific practice. Culture change is a slow process because of inertia and the fear of unintended consequences.

One barrier to change that we encounter as we advocate to journals for more data sharing is an editor's uncertainty about how their publisher will react to such a change. Will they help implement that policy? Will they discourage it because of uncertainty about how it might affect submission numbers or citation rates? With uncertainty, inaction seems to be easier.

One way for a publisher to overcome that barrier for individual journals is to establish data sharing policies that are available to all of their journals. That directly signals that the publisher will be ready to support editorial policy change. In fact, 2017-2018 saw most major publishers doing just that. This has resulted in a significant number of journals now having policies that can increase transparency. The Transparency and Openness Promotion (TOP) Guidelines provide guidance and template language to use in author instructions. Publishers that adopt TOP policies or their equivalent signal support for any of these actions.

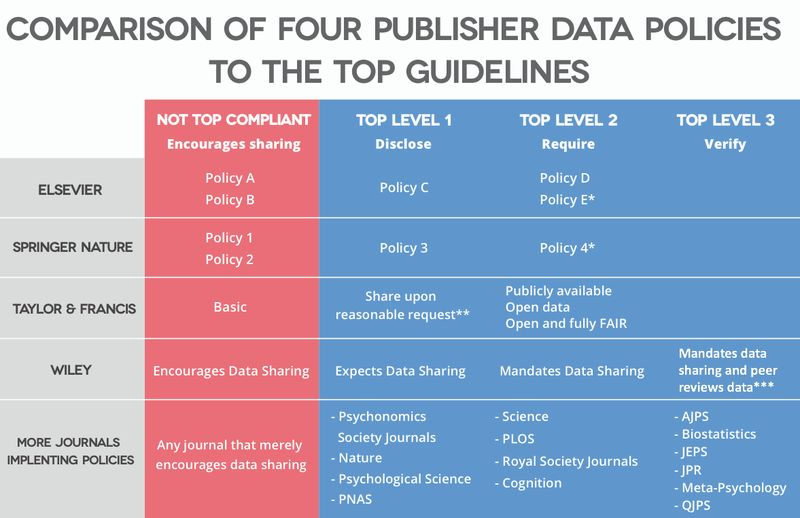

TOP includes eight policies for publishers or funders to use to increase transparency. They include data transparency, materials and code transparency, design and citation standards, preregistration, and replication policies. For simplicity, this post will focus just on the Data Transparency policy. Each TOP policy can be implemented at one of three levels of increasing rigor:

Level 1, Disclosure. Articles must state whether or not data underlying reported results are available and, if so, how to access them.

Level 2, Mandate. Articles must share data underlying reported results in a trusted repository. If data cannot be shared because of ethical or legal constraints, authors must state this and provide as much data as can be reasonably shared.

Level 3, Verify that shared data are reproducible. Shared data must be made available to a third party to verify that they can be used to replicate findings reported in the article.

Similarly, publishers that create their own tiered data sharing policies make it clear to their community that these actions are all possible. These policies are fantastic tools for their editors and authors. By comparing the four policies here, we can see how they relate to one another and discover areas of overlap and gaps.

Elsevier, Springer Nature, Taylor & Francis, and Wiley have all recently adopted tiered data sharing policies that make improvements in supporting transparency easier for the journals that they publish. The policies share some characteristics and rely on similar tiers: from encouragement to share data to increasingly strong mandates to do so.

The first tier of policies at each publisher is consistent with standard practice: an encouragement to share data or a mandate that data be made available upon request. Importantly, TOP starts above this status quo. Such policies have been repeatedly demonstrated to be ineffective and are not compliant with TOP. Furthermore, fulfilling an expectation to share upon request is of course only possible if the data are preserved and if the author chooses to share. It is a mandate that is nearly impossible to enforce. This standard seems increasingly dated in a scientific community where even some of the oldest and most prestigious institutions are expecting Open Science to be the new normal.

These “Available upon request” policies are still the default expectation for many journals. The tiered data sharing policies provided by Elsevier (policy options A and B), Springer Nature (policy types 1 and 2), Taylor & Francis (Basic), and Wiley (Encourages) all start with this “Level 0” of the TOP Guidelines.

Moving beyond the status quo are requirements to disclose whether or not data are available and, if so, to state where. While this still leaves room for a data statement with “available upon request,” it does mandate an action: disclosure. Such policies are consistent with TOP’s Level 1. Elsevier’s Option C, Springer Nature’s Type 3, Taylor & Francis’ "Share Upon Request" (which does also have a disclosure requirement), and Wiley's "Expects Data Sharing" fall into this category.

Some policies require data sharing for datasets in communities where norms already exist. For example, there is a strong culture of posting biomedical data such as protein and DNA sequences or proteomics data, and so requiring that simply reflects current expectations. For all other data underlying the results reported in an article there might be a disclosure requirement until norms within the community change. See Nature's policy for this type of approach.

The heart of the TOP Guidelines is Level 2, which requires that data be made openly available in a repository as a condition of publication. Exceptions are permitted for legal or ethical constraints. TOP provides specific guidance and definitions on what “data” includes, and what to do when raw data cannot be made available.

Elsevier’s Option D and E*, Springer Nature’s Policy 4*, Taylor & Francis’ three open policies, and Wiley’s Mandate all comply with Level 2 of TOP. There are several points worth mentioning here. The first is the number of policies within the Taylor & Francis category. The Publicly Available policy permits data shared with licenses that limit some types of reuse. Their Open Data policy requires a license for reuse, and their FAIR policy requires adherence to standards maintained by FORCE11 that ensure that the shared data can be maximally discovered and reused. These three policies are great at clarifying expectations toward more FAIR data, and I suspect that both TOP and the other publisher policies will take inspiration from them.
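For readers who want to compare journals programmatically, the correspondence described so far can be written down as a small lookup table. The following is only a sketch: the tier labels are taken from this post, while the names `TopLevel`, `POLICY_LEVELS`, and `policies_at_or_above` are hypothetical and introduced purely for illustration. TOP Level 3 is left out because none of the tiers discussed up to this point is described as reaching it.

```python
from enum import IntEnum


class TopLevel(IntEnum):
    """TOP Data Transparency levels discussed so far (Level 3 omitted)."""
    NOT_COMPLIANT = 0   # encouragement, or "available upon request"
    DISCLOSE = 1        # must state whether data are available and where
    MANDATE = 2         # must share data in a repository


# Tier labels as named in this post; this mapping is an illustrative
# summary, not an official statement from any publisher.
POLICY_LEVELS = {
    "Elsevier": {
        "Option A": TopLevel.NOT_COMPLIANT,
        "Option B": TopLevel.NOT_COMPLIANT,
        "Option C": TopLevel.DISCLOSE,
        "Option D": TopLevel.MANDATE,
        "Option E": TopLevel.MANDATE,
    },
    "Springer Nature": {
        "Type 1": TopLevel.NOT_COMPLIANT,
        "Type 2": TopLevel.NOT_COMPLIANT,
        "Type 3": TopLevel.DISCLOSE,
        "Type 4": TopLevel.MANDATE,
    },
    "Taylor & Francis": {
        "Basic": TopLevel.NOT_COMPLIANT,
        "Share Upon Request": TopLevel.DISCLOSE,
        "Publicly Available": TopLevel.MANDATE,
        "Open Data": TopLevel.MANDATE,
        "FAIR": TopLevel.MANDATE,
    },
    "Wiley": {
        "Encourages": TopLevel.NOT_COMPLIANT,
        "Expects Data Sharing": TopLevel.DISCLOSE,
        "Mandate": TopLevel.MANDATE,
    },
}


def policies_at_or_above(level):
    """Return, per publisher, the tiers that meet at least the given level."""
    return {
        publisher: [name for name, lvl in tiers.items() if lvl >= level]
        for publisher, tiers in POLICY_LEVELS.items()
    }


if __name__ == "__main__":
    for publisher, tiers in policies_at_or_above(TopLevel.MANDATE).items():
        print(f"{publisher}: {', '.join(tiers)}")
```

Keeping the levels as an ordered enum makes "at least Level 1" style questions a simple comparison, which matches how the tiers build on one another.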

The next point worth mentioning is that Springer Nature’s and Elsevier’s highest policies don’t quite meet TOP’s Level 3 requirements. This level of the TOP Guidelines is the most rigorous policy. It expects a computational reproducibility check: the shared data and any relevant code are used to reproduce the main findings and figures reported in the manuscript. This step takes time and resources but does the most to ensure credibility. This policy may be tough to implement, but we know of six journals that take this step. The American Journal of Political Science, in partnership with the Odum Institute, takes these measures to ensure trust in the findings published in their journal.

These data peer review policies (Elsevier's Option E and Springer Nature's Type 4) do, however, set good expectations. That peer review is an important and credible step. There are emerging standards for peer reviewing datasets that build on known practices in the field. Taking simple steps to verify that shared data are credible can increase trust. I suspect that more journals and reviewers will begin to take these actions as expectations rise for credibility and transparency in reported research.

These data peer review policies also suggest that there is room for growth within the existing TOP framework. This level of peer review clearly does not meet the level of computational reproducibility as specified in TOP Level 3, but also clearly is more than a mandate to merely share data. Verifying basic quality of shared data and giving guidance to reviewers for doing this can only improve expectations and I think should be fostered. If a new standard between TOP Level 2 and 3 would accomplish that goal, then I think it will be worth implementing.

There is also room for growth within the four publisher policies. Only Wiley's highest policy includes a mention of computational reproducibility. Note that the Wiley policy includes a spectrum of options for peer reviewing data, only the most stringent of which complies with the criteria of TOP's highest standard. The other policies included in this review do not reach TOP Level 3 standards, which are currently being implemented by several leaders in their fields that conduct computational reproducibility checks. While I do not expect that most journals will implement computational reproducibility policies in the near future, publishers can signal principled support for such steps, and their policies should cover the actions that their journals are already conducting.

What is also clear from these policies is that most journals now have a framework to require data transparency that has a stamp of approval from their publisher. In the past, the absence of this approval has stalled progress toward individual journals implementing better policy. There are hundreds of journals that satisfy Level 1 of the TOP Guidelines, and there is no reason that number shouldn’t grow significantly from among the thousands of journals that do not. Without these signals from journals, author expectations for data transparency are not likely to shift en masse. Our mission is to help implement such practices. If you need guidance, or want to vet the policies of a journal you manage, contact me at david@cos.io.

Additional details of each policy can be found on each publisher's website.
