Registered Reports is a publishing format that emphasizes the importance of the research question and the quality of methodology by conducting peer review prior to data collection. High quality protocols are then provisionally accepted for publication if the authors follow through with the registered methodology.

This format is designed to reward best practices in adhering to the hypothetico-deductive model of the scientific method. It eliminates a variety of questionable research practices, including low statistical power, selective reporting of results, and publication bias, while allowing complete flexibility to report serendipitous findings.

“Registered Reports eliminates the bias against negative results in publishing because the results are not known at the time of review.”

-- Daniel Simons, Professor at University of Illinois, Urbana-Champaign, co-founding editor of Registered Replication Reports at Perspectives on Psychological Science and founding editor of Advances in Methods and Practices in Psychological Science

"Because the study is accepted in advance, the incentives for authors change from producing the most beautiful story to the most accurate one."

-- Chris Chambers, Professor at Cardiff University, Section Editor for Registered Reports at Cortex, European Journal of Neuroscience and Royal Society Open Science, Chair of the Registered Reports Committee supported by the Center for Open Science

These articles provide an introduction to the Registered Reports concept: an introduction to a special issue of 15 Registered Reports in Social Psychology (Nosek & Lakens, 2014), and an introduction to Registered Reports for AIMS Neuroscience including answers to 25 common questions about Registered Reports (Chambers, Feredoes, Muthukumaraswamy, & Etchells, 2014). Chris Chambers provides a summary of how the Registered Reports initiative is making an impact in this article in Editors' Update and reflects on the progress of the format, providing a vision for the future in "What’s next for Registered Reports?"

Currently, over 300 journals use the Registered Reports publishing format either as a regular submission option or as part of a single special issue. Other journals offer some features of the format. This list will be updated regularly as new journals join the initiative.

For an article type to qualify as a registered report, the journal policy must include at least these features:

See also this table that compares the specific features of Registered Reports at different outlets.

If you are considering a Registered Reports submission but are not sure how to get started, a good way to begin is to (a) read the specific author guidelines included in the list of participating journals below, (b) complete this template protocol, and then (c) expand the template protocol into a full Stage 1 manuscript.

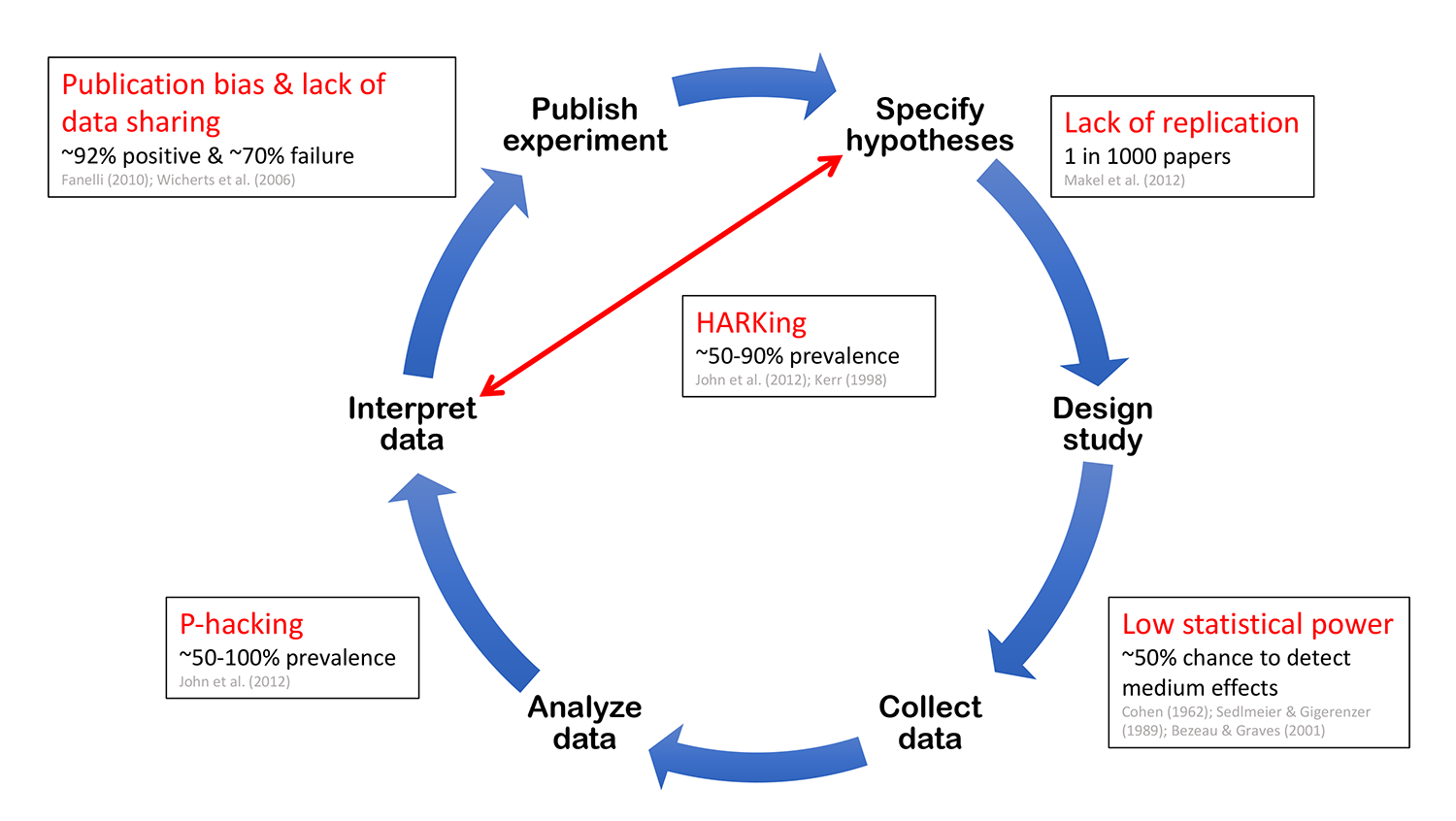

Registered Reports are a form of empirical journal article in which methods and proposed analyses are pre-registered and peer-reviewed prior to research being conducted. High quality protocols are then provisionally accepted for publication before data collection commences. This format of article is designed to reward best practice in adhering to the hypothetico-deductive model of the scientific method (see Figure 1 below). It eliminates a variety of questionable research practices, including low statistical power, selective reporting of results, and publication bias, while allowing complete flexibility to conduct exploratory (unregistered) analyses and report serendipitous findings.

Figure 1. The hypothetico-deductive model of the scientific method is short-circuited by a range of questionable research practices (red). This example shows the prevalence of such practices in psychological science. Lack of replication impedes the elimination of false discoveries and weakens the evidence base underpinning theory. Low statistical power increases the chances of missing true discoveries and reduces the likelihood that obtained positive effects are real. Exploiting researcher degrees of freedom (p-hacking) manifests in two general forms: collecting data until analyses return statistically significant effects, and selectively reporting analyses that reveal desirable outcomes. HARKing, or hypothesizing after results are known, involves generating a hypothesis from the data and then presenting it as a priori. Publication bias occurs when journals reject manuscripts on the basis that they report negative or undesirable findings. Finally, lack of data sharing prevents detailed meta-analysis and hinders the detection of data fabrication.

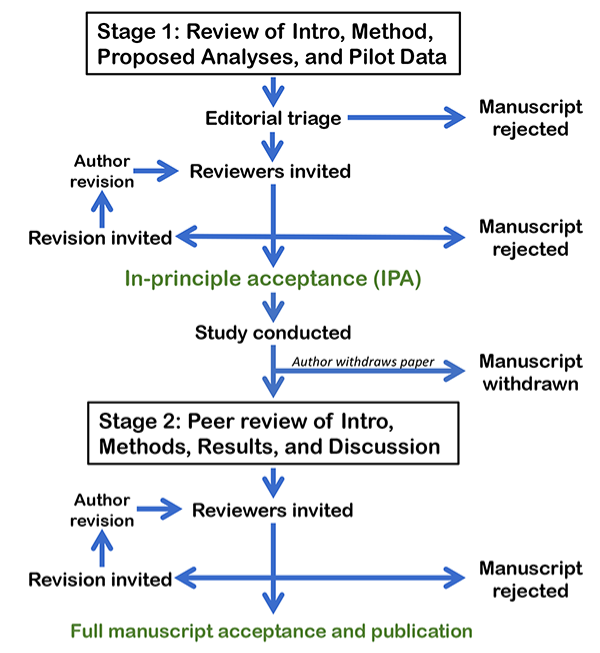

Authors of Registered Reports initially submit a Stage 1 manuscript that includes an Introduction, Methods, and the results of any pilot experiments that motivate the research proposal. Following assessment of the protocol by editors and reviewers, the manuscript can then be offered in-principle acceptance (IPA), which means that the journal virtually guarantees publication if the authors conduct the experiment in accordance with their approved protocol. With IPA in hand, the researchers then implement the experiment. Following data collection, they resubmit a Stage 2 manuscript that includes the Introduction and Methods from the original submission plus the Results and Discussion. The Results section includes the outcome of the pre-registered analyses together with any additional unregistered analyses in a separate section titled “Exploratory Analyses”. Authors are also encouraged or required to share their data on a public and freely accessible archive such as OSF or Figshare and are encouraged to share data analysis scripts. The final complete article is published after this process is complete. A published Registered Report will thus appear very similar to a standard research report but will give readers confidence that the hypotheses and main analyses are free of questionable research practices.
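The Stage 1 / Stage 2 sequence described above can be summarised as a small state machine. The sketch below is a simplification under our own assumptions: the status names and the `advance` helper are hypothetical, revision loops within each stage are omitted, and real journal workflows differ in detail.

```python
from enum import Enum, auto

class Status(Enum):
    STAGE1_SUBMITTED = auto()        # Introduction, Methods, any pilot results
    IN_PRINCIPLE_ACCEPTANCE = auto() # protocol approved; data collection may begin
    STAGE2_SUBMITTED = auto()        # adds Results and Discussion
    PUBLISHED = auto()
    REJECTED = auto()
    WITHDRAWN = auto()

# Allowed transitions (hypothetical simplification). Stage 2 rejection is
# reserved for protocol deviations or failed outcome-neutral checks, never
# for whether the results support the hypotheses.
TRANSITIONS = {
    Status.STAGE1_SUBMITTED: {Status.IN_PRINCIPLE_ACCEPTANCE, Status.REJECTED, Status.WITHDRAWN},
    Status.IN_PRINCIPLE_ACCEPTANCE: {Status.STAGE2_SUBMITTED, Status.WITHDRAWN},
    Status.STAGE2_SUBMITTED: {Status.PUBLISHED, Status.REJECTED, Status.WITHDRAWN},
    Status.PUBLISHED: set(),
    Status.REJECTED: set(),
    Status.WITHDRAWN: set(),
}

def advance(current, target):
    """Move a manuscript to a new status, refusing transitions the model does not allow."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.name} to {target.name}")
    return target

status = advance(Status.STAGE1_SUBMITTED, Status.IN_PRINCIPLE_ACCEPTANCE)
status = advance(status, Status.STAGE2_SUBMITTED)
status = advance(status, Status.PUBLISHED)
print(status.name)  # PUBLISHED
```

The key property the table encodes is that a manuscript cannot skip from Stage 1 straight to publication: results only enter the process after in-principle acceptance.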

The Registered Reports Steering Committee comprises 20 members who oversee the recommended implementation policies, advise on implementation of and improvements to the format, and advocate for increased adoption. Members are invited to join by the existing committee, though external recommendations are welcome. Decisions are made by consensus.

"Funders would be making a very clever investment if they adopted and supported initiatives that promote Registered Reports."

Increase efficiency, reduce bias, and add rigor

When journals and funders partner on a Registered Report model, they review the protocol simultaneously to maximize efficiency and impact. Under this mechanism, authors submit their research proposal before they have funding. Following simultaneous review by both the funder and the journal, the strongest proposals are offered financial support by the funder and in-principle acceptance for publication by the journal.

Existing Partnerships


Available for download here: https://osf.io/gha9f/

Aside from the fact that RRs apply to both clinical and non-clinical research, the RR model moves beyond clinical trial registration in three important ways. First, RRs enjoy continuity of the review process from the initial Stage 1 submission to the final publication, thus ensuring that authors remain true to their registered protocol. This is particularly salient given that only 1 in 3 peer reviewers of clinical research compare authors’ protocols to their final submitted manuscripts (Mathieu, Chan, & Ravaud, 2013). Second, in contrast to RRs, most forms of clinical trial registration (e.g. clinicaltrials.gov) do not peer review study protocols, which provides the opportunity for authors to (even unconsciously) include sufficient “wiggle room” in the methods or proposed analyses to selectively report desirable outcomes (John, Loewenstein, & Prelec, 2011) or alter a priori hypotheses after results are known (Kerr, 1998). Third, even in the limited cases where journals do review and publish trial protocols (e.g. Lancet Protocol Reviews, BMC Protocols, Trials), none of these formats provides any guarantee that the journal will publish the final outcome. These features of the RR model ensure that it represents a substantial innovation over and above existing systems of clinical trial pre-registration.

There are many differences between these types of review. The level of detail assessed in an RR is far greater than in a grant application: a funding application typically includes only a general or approximate description of the methods to be employed, whereas a Stage 1 RR includes a step-by-step account of the experimental procedures and analysis plan. Furthermore, since researchers frequently deviate from their funded protocols, which themselves are rarely published, the suitability of successful funding applications as registered protocols is extremely limited. Finally, RRs are intended to provide an option that is suitable for all applicable research, not only studies that are supported by grant funding.

We believe this criticism is misguided. Some scientists may well believe that the hypothetico-deductive model is the wrong way to frame science, but if so, why do they routinely publish articles that test hypotheses and report p values? Those who oppose the hypothetico-deductive model are not raising a specific argument against RRs – they are criticising the fundamental way research is taught and published in the life sciences. We are agnostic about such concerns and simply note that the RR model aligns the way science is taught with the way it is published.

Contrary to what some critics have suggested, the RR model has never been proposed as a “panacea” for all fields of science or all sub-disciplines within fields. Indeed, we have emphasised that “pre-registration doesn't fit all forms of science, and it isn't a cure-all for scientific publishing.” Furthermore, to suggest that RRs are invalid because they don't solve all problems is to fall prey to the perfect solution fallacy in which a useful partial solution is discarded in favour of a non-existent complete solution. Some scientists have further prompted us to explain which types of research the RR model applies to and which it does not. Ultimately such decisions are for the scientific community to reach as a whole, but we believe that the RR model is appropriate for any area of hypothesis-driven science that suffers from publication bias, p-hacking, HARKing, low statistical power, or a lack of direct replication. If none of these problems exist or the approach taken isn’t hypothesis-driven then the RR model need not apply because nothing is gained by pre-registration.

Statistical power analysis requires prior estimation of expected effect sizes. Because our research culture emphasizes the need for novelty of both methods and results, it is understandable that researchers may sometimes feel there is no appropriate precedent for their particular choice of methodology. In such cases, however, a plausible effect size can usually be gleaned from related prior work. After all, it is rarely the case that experimental designs are truly unique and exist in complete isolation. Even when the expected effect size is inestimable, the RR model welcomes the inclusion of pilot results in Stage 1 submissions to establish probable effect sizes for subsequent pre-registered experimentation. Where expected effect sizes cannot be estimated and authors have no pilot data, a minimal effect size of theoretical interest can still be used to determine a priori power. Authors can also adopt NHST approaches with corrected peeking or Bayesian methods that specify a prior distribution of possible effect sizes rather than a single point estimate. Interestingly, in informal discussions, some researchers, particularly in neuroimaging, have responded to concerns about power on the grounds that they do not care about the size of an effect, only whether or not an effect is present. Those who advance such positions should beware that if the effect under scrutiny has no lower bound of theoretical importance then the experimental hypothesis (H1) becomes unfalsifiable, regardless of power.
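To make the link between an expected (or minimal) effect size and a priori power concrete, the sketch below uses the normal approximation for a two-sided, two-sample comparison of means with equal group sizes, n per group = 2((z₁₋α/₂ + z₁₋β) / d)², where d is the standardized effect size (Cohen's d). This is an assumption-laden approximation rather than any journal's required method; exact t-based calculations give slightly larger samples. The function name `n_per_group` is hypothetical.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(d, alpha=0.05, power=0.90):
    """Approximate sample size per group for a two-sided, two-sample test.

    Normal approximation: n = 2 * ((z_{1-alpha/2} + z_{power}) / d) ** 2.
    d is the expected effect size, or the smallest effect size of
    theoretical interest when no estimate is available.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = z.inv_cdf(power)           # quantile for the desired power
    return ceil(2 * ((z_alpha + z_beta) / d) ** 2)

print(n_per_group(0.5))  # 85 per group at 90% power, alpha = .05
```

Because n scales with 1/d², halving the assumed effect size roughly quadruples the required sample, which is why the justification for d deserves care at Stage 1.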

It is true that RRs are not suitable for underpowered experiments. But underpowered experiments themselves are detrimental to science, dragging entire fields down blind alleys and limiting the potential for reproducibility. We would argue that if a particular research field systematically fails to reach the standards of statistical power set by RRs then this is not “unfair” but rather a deep problem within that field that needs to be addressed. One solution is to combine resources across research teams to increase power, as in the highly successful IMAGEN fMRI consortium.

Pre-registration does not require every step of an analysis to be specified or “hardwired”; where an analysis decision is contingent on some aspect of the data itself, the pre-registration simply requires the decision tree to be specified (e.g. “If A is observed then we will adopt analysis A1 but if B is observed then we will adopt analysis B1”). Authors can thus pre-register the contingencies and rules that underpin future analysis decisions. It bears reiterating that not all analyses need to be pre-registered: authors are welcome to report the outcome of exploratory tests for which specific steps or contingencies could not be determined in advance of data collection.
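As an illustration of a pre-registered contingency, the sketch below hard-codes a hypothetical rule of the “if A then A1, if B then B1” form: if the larger sample variance is at most twice the smaller, a pooled-variance Student's t statistic is computed; otherwise Welch's t statistic is used. The threshold of 2 and the function name are illustrative assumptions, not a recommendation; the point is only that the rule is fixed in writing before any data are seen.

```python
from math import sqrt
from statistics import mean, variance

# Hypothetical pre-registered threshold, fixed at Stage 1.
VARIANCE_RATIO_THRESHOLD = 2.0

def preregistered_t_test(a, b):
    """Apply a pre-registered decision tree; return (branch name, t statistic)."""
    va, vb = variance(a), variance(b)  # sample variances (n - 1 denominator)
    ratio = max(va, vb) / min(va, vb) if min(va, vb) > 0 else float("inf")
    na, nb = len(a), len(b)
    diff = mean(a) - mean(b)
    if ratio <= VARIANCE_RATIO_THRESHOLD:
        # Branch A1: variances comparable, so pool them (Student's t).
        pooled = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
        return "student", diff / sqrt(pooled * (1 / na + 1 / nb))
    # Branch B1: variances unequal, so do not pool (Welch's t).
    return "welch", diff / sqrt(va / na + vb / nb)

print(preregistered_t_test([1, 2, 3, 4, 5], [2, 3, 4, 5, 6]))  # ('student', -1.0)
```

Degrees of freedom and p values are left out to keep the sketch short; a full plan would specify those too.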

No. The RR model welcomes sequential registrations in which authors add experiments at Stage 1 via an iterative mechanism and complete them at Stage 2. With each completed cycle, the previous accepted version of the paper is guaranteed to be published, regardless of the outcome of the next round of experimentation. Authors are also welcome to submit a Stage 1 manuscript that includes multiple parallel experiments, or a sequence of preliminary unregistered experiments at Stage 1 (including results) followed by a proposal for one or more pre-registered experiments.

This is a legitimate concern. However, one way authors can address this is to design and pre-register such projects several months before they commence. Most grants include a delay between the notice of award and the start date – and this period can be several months. During this intervening period, the grant holder can prepare and submit a Stage 1 RR alongside recruiting staff or students to work on the project. Although the cover letter for RRs requires certification that the study could commence promptly, it is possible to agree a delayed commencement date with journal editors.

It takes, on average, about 9 weeks to reach a final Stage 1 editorial decision, not including the time taken for authors to revise their submissions. At Stage 2, the process is slightly faster.

Possibly. Most journals that have launched RRs have made the format available only for original data. However, some journals are offering RRs for meta-analysis or secondary analysis of existing data sets. For further details, see our comparative table of RR features across journals. This table is regularly updated as the list of participating journals grows.

This is one of the most commonly voiced concerns about RRs and would be a legitimate worry if the RR model limited the reporting of study outcomes to pre-registered analyses only. However, we stress that no such constraint applies for RRs launched at any journal. To be clear, the RR model places no restrictions on the reporting of unregistered exploratory analyses; it simply requires that the Results section of the final article distinguishes those analyses that were pre-registered and confirmatory from those that were post hoc and exploratory. Ensuring a clear separation between confirmatory hypothesis testing and exploratory analysis is vital for preserving the evidential value of both forms of enquiry. In contrast to what several critics have suggested, RRs will not hinder the reporting of unexpected or serendipitous findings. On the contrary, the RR model will protect such observations from publication bias. Editorial decisions for RRs are made independently of whether the results support the pre-registered hypotheses; therefore Stage 2 manuscripts cannot be rejected because editors or reviewers find the outcomes of hypothesis testing to be surprising, counterintuitive, or unappealing. This stands in contrast to conventional peer review, where editorial decisions are routinely based on the extent to which results conform to prior expectations or desires.

This need not be the case. It bears reiterating that the RR model does not prevent or hinder exploration – it simply enables readers to distinguish confirmatory hypothesis testing from exploratory analysis. Under the conventional publishing system, scientists are pressured to engage in QRPs in order to present exploration as confirmation (e.g. HARKing). Some researchers may even apply null hypothesis significance testing in situations where it is not appropriate because there is no a priori hypothesis to be tested. Distinguishing confirmation from exploration can only disadvantage scientists who rely on exploratory approaches but, at the same time, feel entitled to present them as confirmatory. We believe this concern reflects a deeper problem that some sciences do not adequately value exploratory, non-hypothesis driven research. Rather than threatening to devalue exploratory research, the RR model is the first step toward liberating it from this framework and increasing its traction. Once the boundaries between confirmation and exploration are made clear we can be free to develop a format of publication that is dedicated solely to reporting exploratory and observational studies. For instance, following the launch of RRs, the journal Cortex is now poised to trial a complementary “Exploratory Reports” format. Rather than seeing RRs as a threat to exploration, scientists would do better to build reforms that highlight the benefits of purely exploratory research.


We appreciate this concern, which will not be settled until scientists either dispel the myth that journal hierarchy reflects quality or the most prestigious journals offer RRs. The RR model is spreading quickly to many journals, and is already offered at prominent journals such as Nature Human Behaviour. As the net continues to widen, there are numerous rewards for junior scientists who choose to submit RRs. First, because RRs are immune to publication bias they ensure that high quality science is published regardless of the outcome. This means that a PhD student could publish every high quality experiment from their PhD rather than selectively publishing the studies that yielded positive results. Second, a PhD student who submits RRs has the opportunity to gain in-principle acceptance for several papers before even submitting their PhD, which in the stiff competition for post-doctoral jobs may provide an edge over graduates with fewer deliverables to show. Third, because RRs neutralise various questionable research practices, such as p-hacking, HARKing and low statistical power, it is likely that the findings they contain will be more reproducible, on average, than those in comparable unregistered articles. This, in turn, will help build the reputations of the participating scientists as trusted generators of knowledge. The incentive structures in many sciences are evolving quickly in favour of reproducibility.

For studies that employ null hypothesis significance testing (NHST), adequate statistical power is crucial for interpreting all findings, whether positive or negative. Low power not only increases the chances of missing genuine effects; it also reduces the likelihood that statistically significant effects are genuine. To address both concerns, RRs that include NHST-based analyses must include a priori power of ≥90% for all tests of the proposed hypotheses. Ensuring high statistical power increases the credibility of all findings, regardless of whether they are clearly positive, clearly negative or inconclusive. It is of course the case that statistical non-significance, regardless of power, can never be taken as direct support for the null hypothesis. However this reflects a fundamental limitation of NHST rather than a shortcoming of the RR model. Authors wishing to estimate the likelihood of any given hypothesis being true, whether H0 or H1, should adopt alternative Bayesian inferential methods as part of their RR submissions.
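The claim that low power reduces the likelihood that significant effects are genuine can be made concrete with the positive predictive value (PPV) of a significant result, PPV = (1 − β)R / ((1 − β)R + α), where 1 − β is power, α is the significance threshold, and R is the pre-study odds that a tested effect is real. The value R = 0.25 used below is an arbitrary assumption chosen only for illustration, and the function name is hypothetical.

```python
def ppv(power, alpha=0.05, prior_odds=0.25):
    """Probability that a statistically significant result reflects a true effect."""
    # Both terms are expressed relative to the number of true-null hypotheses tested.
    true_positives = power * prior_odds  # real effects that reach significance
    false_positives = alpha              # null effects that reach significance
    return true_positives / (true_positives + false_positives)

for p in (0.2, 0.5, 0.9):
    print(f"power={p:.1f}  PPV={ppv(p):.2f}")
```

Under these assumptions the PPV rises from 0.50 at 20% power to about 0.82 at 90% power, holding α fixed.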

Structured review criteria mean that reviewers must find concrete, quality-derived reasons for arguing that a Stage 1 submission is flawed. Author reputation is not among them. To provide further assurance, some journals, such as AIMS Neuroscience, employ masked review for RRs, in which the reviewers are blinded as much as possible to the identity of the authors.

Stage 1 submissions generally require the inclusion of outcome-neutral conditions for ensuring that the proposed methods are capable of testing the stated hypotheses. These might include positive control conditions, manipulation checks, and other standard benchmarks such as the absence of floor and ceiling effects. Manuscripts that fail to specify these criteria will generally not be offered in-principle acceptance, and Stage 2 submissions that fail any critical outcome-neutral tests can be rejected for publication.
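An outcome-neutral test can be written down as an explicit rule at Stage 1 so that it is unambiguous at Stage 2. The sketch below checks only for floor and ceiling effects, by asking whether more than a pre-registered proportion of scores sit at the scale minimum or maximum; the 10% cut-off and the function name are hypothetical, and positive controls or manipulation checks would need their own pre-specified rules.

```python
def floor_ceiling_check(scores, scale_min, scale_max, max_prop=0.10):
    """Report whether each outcome-neutral check passes (True = no floor/ceiling effect)."""
    n = len(scores)
    prop_floor = sum(1 for s in scores if s <= scale_min) / n
    prop_ceiling = sum(1 for s in scores if s >= scale_max) / n
    return {
        "no_floor_effect": prop_floor <= max_prop,
        "no_ceiling_effect": prop_ceiling <= max_prop,
    }

print(floor_ceiling_check([3, 4, 5, 6, 7, 10, 5, 5, 5, 5], scale_min=0, scale_max=10))
```

Because the rule says nothing about whether the hypothesis is supported, it can be applied at Stage 2 without reference to the direction of the main results.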

It is true that peer review under the RR model is more thorough than conventional manuscript review. However, critics who raise this point overlook a major shortcoming of the conventional review process: the fact that manuscripts are often rejected sequentially by multiple journals, passing through many reviewers before finding a home. Under the RR model, at least two of the problems that lead to such systematic rejection, and thus additional load on reviewers, are circumvented. First, editors and reviewers of Stage 1 submissions have the opportunity to help authors correct methodological flaws before they occur by assessing the experimental design prior to data collection. Second, because RRs cannot be rejected based on the perceived importance of the results, the RR model avoids a common reason for conventional rejection: that the results are not considered sufficiently novel or groundbreaking. We believe the overall reviewer workload under the RR model will be similar to conventional publishing. Consider a case where a conventional manuscript is submitted sequentially to four journals, and the first three journals reject it following 3 reviews each. The fourth journal accepts the manuscript after 3 reviews and 3 re-reviews. In total the manuscript will have been seen by up to 12 reviewers and received 15 individual reviews. Now consider what might have happened if the study had been submitted prior to data collection as a Stage 1 RR, assessed by 3 reviewers. Even if it passes through three rounds of Stage 1 review plus two rounds of Stage 2 review, the overall reviewer burden (5 rounds × 3 reviewers = 15 reviews) is the same as under the conventional model (15 reviews).
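The workload comparison in the paragraph above is simple arithmetic; the sketch below tallies it with the scenario's numbers as defaults. The function names are hypothetical, and the RR tally assumes the same three reviewers assess every round.

```python
def conventional_reviews(rejecting_journals=3, reviewers=3, rounds_at_accepting_journal=2):
    """Individual reviews for a manuscript rejected sequentially before acceptance."""
    rejected = rejecting_journals * reviewers           # one round at each rejecting journal
    accepted = rounds_at_accepting_journal * reviewers  # initial reviews plus re-reviews
    return rejected + accepted

def rr_reviews(reviewers=3, stage1_rounds=3, stage2_rounds=2):
    """Individual reviews for a Registered Report reviewed by one panel throughout."""
    return reviewers * (stage1_rounds + stage2_rounds)

print(conventional_reviews(), rr_reviews())  # 15 15
```

The totals match, but the distinct-reviewer counts differ: (3 + 1) × 3 = 12 people in the conventional case versus 3 for the RR.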

The amount of work required to prepare an RR is similar to conventional manuscript preparation; the key difference is that much of the work is done before, rather than after, data collection. The fact that researchers in some fields decide their hypotheses and analysis strategies after inspecting data doesn’t change the fact that these decisions need to be made. And the reward for thinking through these decisions in advance, rather than at the end, is that in-principle acceptance virtually guarantees a publication.

This is a legitimate concern. An ideal strategy, where possible, is to build in minor procedural flexibility when applying for ethical approval. The RR editorial teams at some journals are happy to provide letters of support for authors seeking to amend ethics approval following Stage 1 peer review. This flowchart can help authors decide whether to submit their Stage 1 protocols for peer review before or after obtaining ethics approval.

Under the current RR model this is not possible without committing fraud. When authors submit a Stage 2 manuscript it must be accompanied by a basic laboratory log indicating the range of dates during which data collection took place together with a certification from all authors that no data was collected prior to the date of in-principle acceptance (other than pilot data included in the Stage 1 submission). Time-stamped raw data files generated by the pre-registered study must also be shared publicly, with the time-stamps post-dating in-principle acceptance. Submitting a Stage 1 protocol for a completed study would therefore constitute an act of deliberate misconduct. Beyond these measures, fraudulent pre-registration would backfire for authors because editors are likely to require changes to the proposed experimental procedures following Stage 1 review. Even minor changes to the protocol would of course be impossible if the experiment had already been conducted, and would therefore defeat the purpose of pre-registration. Unless authors were willing to engage in further dishonesty about what their experimental procedures involved, “pre-registering” a completed study would be a highly ineffective publication strategy. It bears mention that no publishing mechanism, least of all the status quo, can protect science against complex and premeditated acts of fraud. By requiring certification and data sharing, the RR model closes an obvious loophole that opportunistic researchers might exploit where doing so didn’t require the commission of outright fraud. But what RRs achieve, above all, is to incentivise adherence to the hypothetico-deductive model of the scientific method by eliminating the pressure to massage data, reinvent hypotheses, or behave dishonestly in the first place.
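As a rough illustration of the time-stamp requirement, the sketch below flags shared data files whose time-stamps precede the in-principle acceptance date, exempting pilot data already reported at Stage 1. The function name, file names, and dates are hypothetical; in practice time-stamps might be read with `os.path.getmtime` and converted with `datetime.fromtimestamp`. Such time-stamps can be altered, so this is a screening check rather than proof, consistent with the point above that no mechanism fully protects against premeditated fraud.

```python
from datetime import datetime

def files_predating_ipa(timestamps, ipa_date, pilot_files=frozenset()):
    """List data files whose time-stamps fall before in-principle acceptance.

    timestamps maps file name -> datetime; pilot_files names pilot data
    already reported in the Stage 1 submission, which are exempt.
    """
    return sorted(
        name for name, stamp in timestamps.items()
        if stamp < ipa_date and name not in pilot_files
    )

# Hypothetical example data.
ipa = datetime(2024, 3, 1)
stamps = {
    "pilot.csv": datetime(2023, 11, 5),
    "sub01.csv": datetime(2024, 3, 10),
    "sub02.csv": datetime(2024, 2, 20),
}
print(files_predating_ipa(stamps, ipa, pilot_files={"pilot.csv"}))  # ['sub02.csv']
```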

For this criticism to be valid, scientists would need to be motivated solely by the act of publishing, with no desire to make true discoveries or to build a coherent body of research findings across multiple publications. We are more optimistic about the motivations of the scientific community, but nevertheless, it is important to note that running a pre-registered study carelessly would also sabotage the outcome-neutral tests that are necessary for final acceptance of the Stage 2 submission.

Some scientists have argued that the RR model could overburden the peer review mechanism if authors were to deliberately submit more protocols than they could carry out. As one critic put it: “Pre-registration sets up a strong incentive to submit as many ideas/experiments as possible to as many high impact factor journals as possible.” Armed with in-principle acceptance, the researcher could then prepare grant applications to support only the successful protocols, discarding the rejected ones. Were such a strategy to be widely adopted it could indeed overwhelm peer review. However, this problem does not apply to the RR model at any of the journals where the format has been launched. All Stage 1 submissions must include a cover letter stating that all necessary support (e.g. funding, facilities) and approvals (e.g. ethics) are in place and that the researchers could start promptly following in-principle acceptance. Since these guarantees could not be made for unsupported proposals, this concern is moot.

Nothing. Contrary to some concerns, authors are free to withdraw their manuscript at any time and are not “locked” into publishing with the journal that reviews the Stage 1 submission. If the withdrawal happens after in-principle acceptance has been awarded, many journals will simply publish a Withdrawn Registration that includes the abstract from the Stage 1 submission plus a brief explanation for the withdrawal. At several journals, authors must agree to the publication of a Withdrawn Registration should the need arise.

This is an understandable concern but highly unlikely. Only a small group of individuals will know about Stage 1 submissions, including the editors plus a small set of reviewers; and the information in Stage 1 submissions is not published until the study is completed. It is also noteworthy that once in-principle acceptance is awarded, the journal cannot reject the final Stage 2 submission because similar work was published elsewhere in the meantime. Therefore, even in the unlikely event of a reviewer rushing to complete a pre-registered design ahead of the authors, such a strategy would confer little career advantage for the perpetrator (especially because the ‘manuscript received’ date in the final published RR refers to the initial Stage 1 submission date and so will predate the ‘manuscript received’ date of any standard submission published by a competitor). Concerns about being scooped do not stop researchers applying for grant funding or presenting ideas at conferences, both of which involve releasing ideas to a larger group of potential competitors than would typically see a Stage 1 RR.

RRs promise to improve the reproducibility of hypothesis-driven science in any field where one or more of the following problems apply:

- Publication bias: journals selectively publishing results that are statistically significant or otherwise attractive.
- p-hacking: in studies where the conclusions depend on inferential statistics, researchers selectively reporting analyses that were statistically significant.
- HARKing (“hypothesizing after results are known”): researchers presenting a hypothesis derived from the data as though it was a priori.
- Low statistical power: researchers failing to ensure sufficient sample size to detect a real effect. Inadequate power not only reduces the chances of detecting genuine effects; it also reduces the chances that observed positive effects are genuine.
- Lack of direct replication: insufficient numbers of studies seeking to establish reproducibility by repeating the methods of previous experiments as closely as possible.

The RR format minimizes these problems and also requires authors to share their study data upon publication. Therefore, if your journal publishes any articles within any sub-specialty that is exposed to any of these problems then your journal stands to benefit from RRs. Some sub-specialties within particular journals may be more compatible with RRs than others – this is why we recommend RRs as a new option for authors rather than being imposed as a mandatory or universal requirement.
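One form of p-hacking named in the Figure 1 caption, collecting data until analyses return statistically significant effects, can be demonstrated by simulation. The sketch below assumes the null hypothesis is true (observations drawn from a standard normal distribution), runs a z-test with known unit variance after every 10 observations up to 100, and stops at the first |z| > 1.96. The look schedule, sample cap, and function name are hypothetical choices for illustration.

```python
import random
from math import sqrt

def peeking_false_positive_rate(n_sims=2000, max_n=100, look_every=10,
                                z_crit=1.96, seed=1):
    """Share of null-true studies declared 'significant' under optional stopping."""
    rng = random.Random(seed)  # seeded for reproducibility
    hits = 0
    for _ in range(n_sims):
        total = 0.0
        for n in range(1, max_n + 1):
            total += rng.gauss(0.0, 1.0)  # H0 true: mean 0, sd 1
            # z = sample mean / (1 / sqrt(n)) = total / sqrt(n)
            if n % look_every == 0 and abs(total / sqrt(n)) > z_crit:
                hits += 1
                break  # stop collecting once 'significant'
    return hits / n_sims

print(peeking_false_positive_rate())                # repeated looks
print(peeking_false_positive_rate(look_every=100))  # single pre-planned look
```

With a single pre-planned look the false positive rate stays near the nominal 5%, whereas repeated uncorrected looks push it well above that; this is the inflation that pre-registered stopping rules, or the corrected peeking mentioned earlier, are meant to prevent.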

No. We recommend offering RRs as a new option for authors who are willing to pre-register their designs in return for provisional article acceptance. There is no suggestion that RRs should be mandatory or universal in science.

The first step is to make sure that all decision-makers have read the detailed guidelines and requirements for RRs, because most objections are based on simple misunderstandings. A generic example of these guidelines can be found here. In anticipation of discussing the initiative among your editorial board, we also recommend circulating the FAQs on this web site. Please notify us if a critical issue arises that prevents your journal from adopting RRs. We welcome feedback and will be happy to address any new concerns or objections on this page. Where there is editorial resistance, compromise options are to pilot RRs within a specific sub-discipline or to trial them as part of a special issue of the journal.

No. Although some journals have launched the format specifically for replications, the format is applicable to both novel experimental designs and replications. The journals Cortex and Drug and Alcohol Dependence are examples of outlets that have invited the format for both high-value replications and novel studies.

The weight of evidence suggests that journal impact factor (JIF) does not predict the impact of individual articles; however, we appreciate the practical reality that JIF nevertheless carries weight with many editors and publishers. Emerging evidence suggests that RRs are, on average, cited above the JIF of the journal in which they are published, and there are sound logical reasons for believing that RRs will consistently produce more citations and in fact raise the JIF of outlets that adopt them. First, by requiring data archiving and assuring readers that research outcomes were not produced by p-hacking or HARKing, RRs will, on average, offer more credible conclusions than standard articles. Second, by requiring high statistical power (in some cases more than double the discipline average), RRs should yield outcomes, whether positive or negative, whose replicability is among the highest in science. Third, journals are free to exercise full editorial control over the pre-selection of RRs that address the most important and timely scientific questions, that offer the most innovative and intelligent methods to tackle them, and that safeguard feasibility. Therefore, there is little reason for journals to fear the consequences of RRs for JIF. If anything, journals that decide not to offer RRs may fall behind their competitors.

The time spent by an RR in the editorial process will depend on the duration of Stage 1 review, the time taken by the researchers to complete the pre-registered study, and the duration of Stage 2 review. For this reason, the handling time will exceed that of standard submissions, and because publishers often track the performance of journals via these handling times, RRs could generate a false signal of poor operational efficiency. There are two solutions to this problem. The first and most obvious fix is simply to exclude the “study time” (i.e. the time between in-principle acceptance and the receipt of the Stage 2 manuscript) from the journal statistics. If this is not feasible, then the second approach, currently adopted at several journals, is to treat in-principle acceptance as a technical rejection. Authors of provisionally accepted Stage 1 manuscripts are of course informed that this is a rejection in name only, and the subsequent Stage 2 submission is treated as a linked resubmission. This solution is compatible with the existing infrastructure at most journals and keeps the study time out of the journal statistics.

This is straightforward. The peer review process for RRs differs in two ways from conventional review, both of which can be dovetailed with existing systems. First, unlike standard submissions, RRs have two distinct stages of review: one before data collection and one afterward. This can be integrated into existing software by simply treating each stage as a new submission, linked by a journal editorial assistant, and by treating in-principle acceptance as a technical “rejection”. Second, the review process for RRs at several journals is structured: reviewers are asked to assess the extent to which the manuscript meets a number of fixed criteria. Even if your handling software is unable to implement a structured review mechanism in which reviewers enter text into pre-defined fields, the criteria can easily be incorporated into the reviewer invitation letters. We have found that this works adequately provided the attention of reviewers is drawn specifically to these criteria. Generic templates of reviewer invitation letters and editorial decision letters can be downloaded from our Resources for Editors page (see tabs above). Once these are adapted to your specific requirements, the technical staff at your publisher should be able to add them to the system in a matter of days. At Cortex, for example, the necessary amendments to the Elsevier Editorial System were implemented in less than a week by a single member of the publishing staff.

It is not difficult at all. Even though the review process may seem unconventional, we have found that reviewers quickly adjust to the requirements. To help streamline implementation, our Resources for Editors tab provides generic templates of reviewer invitation letters and editorial decision letters. If there are any other resources you feel would be useful, please contact us and we will add them to the resources page. We are also happy to provide individual consultation and assistance.

No. The journal can apply great stringency during Stage 1 review to ensure that provisional acceptance is restricted to those studies judged to be the most likely to produce outcomes that are informative and important to the field.

No. The Stage 1 review process allows reviewers and editors to pre-specify positive controls, manipulation checks or other standards for assessing study quality (e.g. data verifying that a particular intervention or measure was administered appropriately). To prevent publication bias, the only requirement is that such tests are pre-specified at Stage 1 before results are known, and that they are independent of the primary outcome measures.

For example, at Royal Society Open Science, Stage 1 Criterion 6 asks reviewers to assess "Whether the authors have considered sufficient outcome-neutral conditions (e.g. absence of floor or ceiling effects; positive controls; other quality checks) for ensuring that the results obtained are able to test the stated hypotheses." For manuscripts that are provisionally accepted and then resubmitted with the results (Stage 2), reviewers are then asked to assess "Whether the data are able to test the authors’ proposed hypotheses by passing the approved outcome-neutral criteria (such as absence of floor and ceiling effects or success of positive controls or other quality checks)". Failure of a crucial quality check could thus be grounds for rejection at Stage 2.

We recommend establishing a dedicated team of associate editors to triage Stage 1 submissions before distributing them for review. Early in the life of this new format, authors may be uncertain of the requirements, and the journals that have adopted RRs so far commonly receive submissions that need basic amendments before they are suitable for in-depth review. For instance, the Stage 1 manuscript might not include an adequate statistical power analysis, or it may lack sufficient detail in the proposed analyses. Often these oversights can be detected and corrected before seeking in-depth review. Depending on the scope of the journal, editorial boards may also wish to pre-select Stage 1 RRs that propose to address the most important or relevant research questions. At Cortex and many other journals, these responsibilities are overseen by a team of four associate editors in different sub-specialties together with a section editor who is responsible for the RR category. These requirements will differ between disciplines; in many sciences a smaller triage team would suffice.
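As an illustration of the kind of power analysis a triage editor might look for, the sketch below computes the sample size per group for a simple two-group comparison. It assumes a two-sided test of a difference in means and uses the standard normal approximation n = 2(z₁₋α/₂ + z₁₋β)² / d² per group, where d is the standardized effect size (Cohen's d). The function name, the default power of 0.9, and the example effect size are hypothetical choices, not the requirement of any particular journal; because it is a normal approximation, it gives a slightly smaller n than an exact t-test calculation would.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size_d: float, alpha: float = 0.05, power: float = 0.9) -> int:
    """Approximate sample size per group for a two-sided, two-sample comparison of means.

    Uses the normal approximation n = 2 * (z_{1-alpha/2} + z_{1-beta})**2 / d**2,
    rounded up to the next whole participant.
    """
    if effect_size_d <= 0:
        raise ValueError("effect size must be positive")
    standard_normal = NormalDist()  # mean 0, standard deviation 1
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)  # critical value for a two-sided test
    z_beta = standard_normal.inv_cdf(power)           # quantile matching the target power
    return ceil(2 * (z_alpha + z_beta) ** 2 / effect_size_d ** 2)


if __name__ == "__main__":
    # Hypothetical target: a medium effect (d = 0.5) at alpha = 0.05.
    print(n_per_group(0.5, power=0.8))  # 63 per group
    print(n_per_group(0.5, power=0.9))  # 85 per group
```

Raising the target power from 0.8 to 0.9 adds over 20 participants per group in this example, which is why a Stage 1 manuscript should state its assumed effect size, alpha, and power explicitly rather than report a sample size alone.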

Please see the Participating Journals tab for a current listing, including introductory editorials as well as detailed author and reviewer guidelines in each case. You can also find a table comparing the features of different RR formats here.

Registered Reports represent an ideal workflow to present the results of confirmatory, hypothesis-driven research. However, there are other formats that also reduce bias and increase the transparency of the research process.

Journals may conduct peer review in two stages, even when the results are already known to the authors.

In the first stage, authors submit a full manuscript containing the abstract, introduction, and methods, without the results and discussion sections. The submitted manuscript must not reveal any outcome-relevant results. The methods must contain a complete plan of the analyses to be included in the full article. If relevant, outcome-irrelevant results can be reported to demonstrate, for example, that experimental manipulations were effective, or that outcome variables were measured reliably and conformed to distributional assumptions.

If the submission passes initial review, then the authors will submit a complete manuscript for second stage review to confirm that the final report adequately addresses reviewer concerns from the initial submission.

Journals that use Results Blind Peer Review include:

Confirmatory research is only half of the picture. Exploratory research is necessary in order to discover unexpected trends and allow for serendipitous findings. Current incentives encourage presenting exploratory findings as the result of confirmatory hypothesis tests, but journals that publish Exploratory Reports encourage more transparency into the work that led to a particular finding. See additional rationale for exploratory reports at the journal Cortex here and author guidelines here.

Journals that currently offer Exploratory Reports include:

Prereg posters are posters at academic conferences that present planned research, before data are collected or analysed. This format allows presenters to receive feedback on their theory, hypotheses, design and analyses from their colleagues (conference attendees), which is likely to improve the study. In turn, this can improve more formal preregistration, reducing the chances of subsequent deviation, and/or facilitate submission of the work as a Stage 1 Registered Report. Moreover, colleagues with shared scientific interests can become aware of the study earlier on, which can open the door to collaborations. For preliminary evidence of the benefits of prereg posters for encouraging constructive feedback, promoting open science and supporting early-career researchers, see this study by Brouwers et al. (2020).

The following conferences offer attendees the opportunity to present prereg posters:


Unless otherwise noted, this site is licensed under a Creative Commons Attribution 4.0 International (CC BY 4.0) License.
